Architecting for Performance on AWS

By David Vivsh and Shawn Jiang

Application performance is one of the key contributors to end-user experience. Learn the hidden impacts of performance issues and improvement options.

There’s little debate that application performance is one of the key contributors to end-user experience. If your application is slow, customers won’t buy from you (or will leave). For websites, even milliseconds matter. For example, Trainline reduced latency on their website by 0.3 seconds, which led to customers spending an extra NZ$15 million a year through their site. Staples saw a 10% increase in their conversion rate by improving their website’s load time by 1 second. The BBC calculated that they lose an additional 10% of users for each extra second their site takes to load.

Hidden Impacts of Performance Problems

A slow-running application is a clear indication that there are issues in the codebase, the architecture, or the infrastructure. However, an application does not need to be running slowly for there to be underlying performance issues. The following are some of the hidden impacts of performance issues:

- Resources are scaled up: rather than changing the code or architecture, a common shortcut to improve performance is to deploy larger, more expensive instance types. The application then doesn’t appear to have a performance problem, because the larger resources mask the issue.

- High running costs: whilst a poorly performing application is likely to be running on scaled-up infrastructure (contributing to high running costs), it is also likely to need scaling out (onto additional instances) much more frequently. Scaling out with large instances can significantly increase overall running costs.

- Environmental impacts: organisations such as Sustainable Web Design share insights on how to measure and reduce the CO2 impact of poorly performing websites. Running on large CPUs and sending large amounts of data across the Internet has a direct impact on CO2 emissions. Z Energy reported how they reduced their website’s size and data transfer, which improved performance and reduced the average CO2 cost per page view.

Performance Improvement Options

There are a number of steps to take once performance issues are identified. The first few steps include benchmarking your application and running controlled tests to understand where performance bottlenecks are. Identifying bottlenecks is an entire discipline in its own right, and is beyond the scope of this article. There are also countless ways to optimise a codebase to make it more performant, which is also beyond the scope of what we can look at here. Instead, the following focuses on some commonly used approaches to architect for performance.

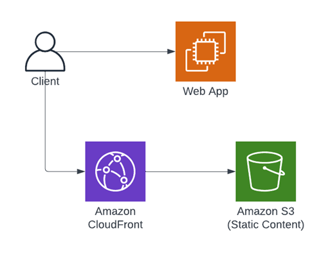

Use a Content Delivery Network (CDN)

If you’ve identified that your web frontend is struggling under load, a simple thing to consider is whether you could reduce the number of requests it needs to serve. A CDN is typically used to create copies of static content and store them in multiple locations around the world. Then, when users access your static content, it is served directly from a CDN location that may be much closer to them, and (once the content is cached) the request never reaches your web application. Amazon CloudFront is a CDN service that integrates with other AWS services and allows you to deliver content to your users with low latency and high transfer speeds.

[Diagram: architecture before and after adding a CDN]

Moving static assets to a CDN reduces the number of calls a web application needs to serve. It also brings the following benefits:

- The web application can potentially run on a smaller instance type, reducing cost

- Better performance for end users: CDNs serve content from edge locations much closer to them
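To make the request-reduction effect concrete, here is a minimal Python sketch of a single edge location sitting in front of an origin. It is a toy model under stated assumptions, not how CloudFront is implemented or configured: `EdgeCache`, `web_app` and the list of static file extensions are hypothetical, and real CDNs decide what to cache from cache behaviours and `Cache-Control` headers rather than from a hard-coded extension list.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

# Hypothetical rule: treat these file types as static, cacheable assets.
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".svg", ".woff2")


@dataclass
class EdgeCache:
    """Toy model of one CDN edge location in front of an origin web app."""

    origin: Callable[[str], bytes]  # function that asks the origin for a path
    ttl_seconds: float = 86400.0    # how long a cached copy is considered fresh
    _store: Dict[str, Tuple[float, bytes]] = field(default_factory=dict, init=False, repr=False)

    def get(self, path: str) -> bytes:
        # Dynamic requests are always forwarded to the origin.
        if not path.endswith(STATIC_EXTENSIONS):
            return self.origin(path)
        now = time.monotonic()
        cached = self._store.get(path)
        if cached is not None and now - cached[0] < self.ttl_seconds:
            return cached[1]              # edge hit: the origin is not contacted
        body = self.origin(path)          # edge miss: fetch once from the origin
        self._store[path] = (now, body)   # keep a copy for later requests
        return body


origin_requests = []  # records every path the web application actually serves


def web_app(path: str) -> bytes:
    """Hypothetical origin web application."""
    origin_requests.append(path)
    return f"content of {path}".encode()


edge = EdgeCache(origin=web_app)
for _ in range(100):
    edge.get("/static/app.js")  # static asset, cacheable at the edge
    edge.get("/api/basket")     # dynamic request, always reaches the origin

print(len(origin_requests))  # 101
```

Of the 200 requests above, the origin serves `/static/app.js` only once; the 100 dynamic API calls still all reach the web application. That is why a CDN helps most when a large share of the traffic is static assets.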

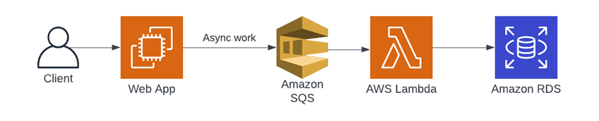

Implement the web-queue-worker pattern

Another pattern commonly used when web applications are dealing with high load is the web-queue-worker pattern. This pattern is useful when a web application has long-running requests to serve. Rather than waiting for the request to complete, the web application puts the request onto a queue and returns an “ok” response straight away.

Consider, for example, a web application that needs to process an import file. It could:

- Accept the request

- Process the data

- Write the data to a data store

- Respond with a success page

With the web-queue-worker pattern though, the alternative flow might be:

- Accept the request

- Put the request/data on a queue

- Respond with a success page

The end user would see (perhaps) a “processing” page, whilst in the background:

- A worker process picks up the data from the queue

- The data is processed by the worker

- The worker writes the data to a data store

Depending on the approach of the web application, the user may then see some form of “completion” message.
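The flow above can be sketched in a few lines of Python. This is an in-process illustration only, assuming that `queue.Queue` stands in for a managed queue such as Amazon SQS and that plain dictionaries stand in for the data store and a job-status table. `handle_import_request` and `worker` are hypothetical names, and “processing” the import file here just means counting its non-empty rows. In a real deployment the web tier and the worker would run as separate processes or services.

```python
import queue
import threading
import uuid
from typing import Dict, Optional, Tuple

# Stand-ins (assumptions): in production these might be an SQS queue,
# a database table and a job-status store.
jobs: "queue.Queue[Optional[Tuple[str, str]]]" = queue.Queue()
data_store: Dict[str, int] = {}
job_status: Dict[str, str] = {}


def handle_import_request(file_contents: str) -> Dict[str, str]:
    """Web tier: accept the request, queue the work, respond immediately."""
    job_id = str(uuid.uuid4())
    job_status[job_id] = "processing"
    jobs.put((job_id, file_contents))
    return {"job_id": job_id, "status": "processing"}


def worker() -> None:
    """Worker tier: pick up queued work, process it, write the result."""
    while True:
        item = jobs.get()
        if item is None:          # sentinel used to stop this demo worker
            jobs.task_done()
            return
        job_id, contents = item
        rows = [line for line in contents.splitlines() if line.strip()]
        data_store[job_id] = len(rows)    # write the result to the data store
        job_status[job_id] = "complete"   # what a completion page would check
        jobs.task_done()


worker_thread = threading.Thread(target=worker, daemon=True)
worker_thread.start()

response = handle_import_request("a,1\nb,2\n\nc,3\n")
print(response["status"])               # processing (returned before the work is done)

jobs.join()                             # demo only: wait for the worker to finish
print(job_status[response["job_id"]])   # complete
print(data_store[response["job_id"]])   # 3

jobs.put(None)                          # stop the worker
worker_thread.join()
```

Because `handle_import_request` returns as soon as the message is queued, its response time no longer depends on how long the import takes. The worker drains the queue at its own pace, and the status dictionary is what a “processing” or “completion” page would poll.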

[Diagram: architecture before and after introducing the web-queue-worker pattern]

Implementing the web-queue-worker pattern can bring the following benefits:

- Response times from the web application are much lower, meaning more requests can be served – potentially allowing for a smaller instance type to be used

- End users see response pages much more quickly, giving the perception of a more responsive app

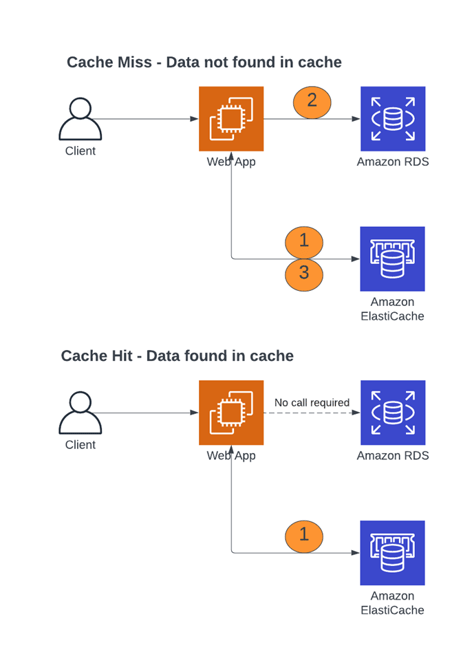

Cache-aside pattern

When the backend data store is identified as a potential bottleneck, there are patterns to reduce the number of calls it needs to service. One of these patterns is the cache-aside pattern. Using this pattern, an application first checks the cache for the data it needs. If the data is not there, the data store is then queried, with the result then being added to the cache. This means that subsequent requests can be served directly from the cache, rather than the data store. This pattern is very effective for static or infrequently changing data.
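The read path described above can be sketched as follows. It is a minimal, single-process Python illustration: a dictionary with per-entry expiry stands in for a cache such as Amazon ElastiCache, and `query_db` is a hypothetical function standing in for a query against the data store. The `invalidate` method reflects a common companion practice (removing the cached entry when the underlying data changes) rather than something described above.

```python
import time
from typing import Any, Callable, Dict, Tuple


class CacheAside:
    """Check the cache first; on a miss, load from the data store and cache it."""

    def __init__(self, load_from_store: Callable[[str], Any], ttl_seconds: float = 300.0):
        self._load_from_store = load_from_store
        self._ttl = ttl_seconds
        self._cache: Dict[str, Tuple[float, Any]] = {}  # key -> (expiry time, value)

    def get(self, key: str) -> Any:
        entry = self._cache.get(key)
        if entry is not None and time.monotonic() < entry[0]:
            return entry[1]                       # 1. cache hit: data store not touched
        value = self._load_from_store(key)        # 2. cache miss: query the data store
        self._cache[key] = (time.monotonic() + self._ttl, value)  # 3. add result to cache
        return value

    def invalidate(self, key: str) -> None:
        """Drop a cached entry, e.g. after the underlying data is updated."""
        self._cache.pop(key, None)


# Hypothetical data store and a record of how often it is queried.
prices = {"rail-pass": 120}
store_queries = []


def query_db(key: str) -> int:
    store_queries.append(key)
    return prices[key]


cache = CacheAside(query_db, ttl_seconds=60)
for _ in range(1000):
    cache.get("rail-pass")
print(len(store_queries))          # 1

prices["rail-pass"] = 99           # the data changes in the store...
cache.invalidate("rail-pass")      # ...so the stale cached copy is removed
print(cache.get("rail-pass"))      # 99
print(len(store_queries))          # 2
```

The TTL bounds how stale an entry can become if an update is ever missed, which is one reason the pattern suits static or infrequently changing data best.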

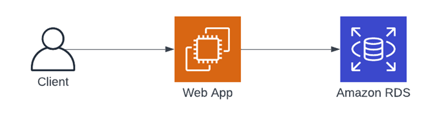

[Diagram: architecture before and after introducing the cache-aside pattern]

Implementing the cache-aside pattern can bring the following benefits:

- Fewer calls to the main data store, which may decrease cost depending on the data store cost model

- The RDS instance could potentially be reduced in size, as it is doing less work

- Faster access to data – reading from a cache can be significantly faster than reading from RDS (depending on the complexity of the query)

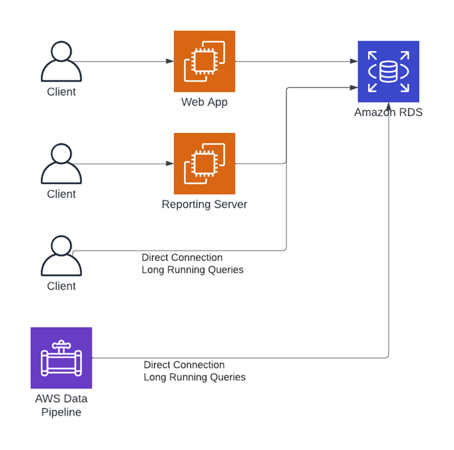

Make use of read replicas

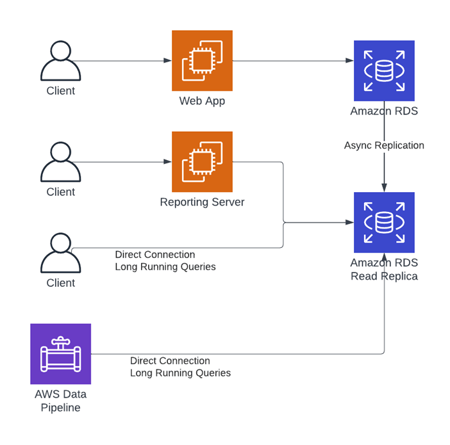

The final pattern considered here is the read replica pattern. This is another pattern to consider if long-running queries on your database are causing performance bottlenecks. With the read replica pattern, a copy of your database is made available in read-only mode. This replica is kept up to date with the primary database using asynchronous replication. The read replica can then serve long-running reporting queries, rather than relying on the main application database.
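On Amazon RDS, the primary instance and each read replica are reached through separate endpoints, so the application (or a proxy in front of it) decides where each query goes. The Python sketch below shows one possible routing rule under stated assumptions: the endpoint names and the `is_report` flag are hypothetical, and only long-running reporting reads are sent to the replica, because asynchronous replication means the replica may briefly lag behind the primary.

```python
from dataclasses import dataclass

# Hypothetical endpoints: substitute the real primary and replica endpoints.
PRIMARY_ENDPOINT = "app-db.example.internal"
REPLICA_ENDPOINT = "app-db-replica-1.example.internal"


@dataclass(frozen=True)
class Query:
    sql: str
    is_report: bool = False  # True for long-running reporting queries


def choose_endpoint(query: Query) -> str:
    """Route reporting reads to the read replica; everything else to the primary."""
    words = query.sql.split(None, 1)
    first_keyword = words[0].upper() if words else ""
    if first_keyword == "SELECT" and query.is_report:
        # Heavy read-only work moves off the primary; results may be
        # slightly stale because replication is asynchronous.
        return REPLICA_ENDPOINT
    # Writes, and reads that must see the latest data, stay on the primary.
    return PRIMARY_ENDPOINT


print(choose_endpoint(Query("SELECT region, SUM(total) FROM orders GROUP BY region", is_report=True)))
print(choose_endpoint(Query("UPDATE orders SET status = 'shipped' WHERE id = 42")))
print(choose_endpoint(Query("SELECT * FROM orders WHERE id = 42")))
```

Checking only the first keyword is deliberately simplistic: anything it does not recognise (for example a statement starting with `WITH`) falls through to the primary, which is the safe default.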

[Diagram: architecture before and after adding a read replica]

By making use of a read replica, the following benefits might be seen:

- Better performance: less table locking and fewer waits on the primary database.

- Lower cost: the primary database instance can potentially be reduced in size.

Whilst it may initially seem counter-intuitive, adding resources to your cloud solution can reduce costs whilst improving performance. The rule of thumb for performance is: if a resource has a lot of work to do, find a way for a different resource to take on some of that work. The combined cost of running the two resources (potentially on smaller instance types) can be less than the cost of continuously scaling up a single resource.

When architecting your solutions to be performant, you can:

- Reduce costs

- Increase revenue

- Improve end-user experience

- Improve sustainability

If you want to understand how you can achieve these results for your business, get in touch with our team today.